💥When should you start to sell Nvidia?💥

🛑 Is the stock at its peak? 5 flashing warning signs to watch 🛑

NVDA seems to be the easiest way to play the AI theme. Without NVDA’s GPUs (it has ~95% market share), it would be very hard to train large language models (LLMs). It’s the BEST way to play AI.

Even on its most recent earnings call in Nov 2024, CEO Jensen Huang said supply constraints will continue for several quarters. NVDA is placing more and more wafer orders at TSMC (Taiwan Semiconductor Manufacturing Company, which manufactures the chips NVDA designs), and data center revenue has more than doubled from a year ago, from ~$15B per quarter to ~$31B per quarter. Even so, supply still can’t keep up with demand.

Continued supply constraints also mean that pricing will remain healthy for NVDA, at least for now.

However, in the semiconductor ecosystem, when things turn and demand slows, corrections can happen very fast. The worst nightmare is when a semiconductor company’s customers have too much chip inventory on hand.

Since Nvidia plans to introduce a new GPU generation with better performance every year instead of every two years, its product cycle has shortened from two years to one. That also raises the risk that older GPU generations become obsolete sooner.

When things crash in the semiconductor space, they crash hard and they crash fast.

This is what happened during the crypto bubble in 2018, when NVDA’s stock price got crushed, falling from about $7 (split-adjusted) at its September peak to $3.6 in December (we will discuss this backstory in more detail under warning sign No.5 at the end of this article).
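To put that drop in perspective, here is a small Python sketch of the peak-to-trough drawdown using the approximate split-adjusted prices quoted above. The `drawdown` helper is just an illustrative name, not a standard library function.

```python
def drawdown(peak: float, trough: float) -> float:
    """Peak-to-trough change as a (negative) fraction of the peak price."""
    return trough / peak - 1.0


# Approximate split-adjusted NVDA prices quoted above (Sept 2018 peak, Dec 2018 low)
peak_price, trough_price = 7.0, 3.6
print(f"Drawdown: {drawdown(peak_price, trough_price):.1%}")  # prints "Drawdown: -48.6%"
```

In other words, the stock roughly halved in about three months.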

In investing, it’s critical to be cautious when everyone is so bullish about one stock. I am not saying the stock price will crash in the next quarter or two, and I agree that NVDA remains the best way to play AI in the LONG RUN. But I do want to analyze when you should start to sell Nvidia, and how to manage the near-term risk if you have an oversized position.

I will analyze 💥5 flashing warning signs💥, both external and internal, that you should watch out for.

No.1 Cloud Capex Growth

The No.1 thing to watch for is the cloud hyperscalers’ (Microsoft, Google, Amazon) capex results and guidance.

When analyzing demand sustainability, the most important question is - who is spending all this money on GPUs and AI servers?

The most obvious ones are cloud hyperscalers such as Amazon, Microsoft, and Google. Enterprise customers, including large financial institutions and pharmaceutical companies, are also spending on GPUs to run AI on-premises because of privacy and compliance concerns.

Most small and medium-sized businesses don’t have the capability to procure their own IT infrastructure, so they mostly use cloud service providers for AI.

If you have been following the capital expenditure numbers reported by the big 3 CSPs (Cloud Service Providers), you will notice the big spikes in spending:

Microsoft’s capital expenditure more than doubled in 2 years, from $5-6B per quarter pre-AI to $13-15B per quarter.
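As a quick sanity check on the size of that jump, the sketch below computes the multiple implied by the quarterly ranges quoted above. Reading the ranges as low, high, and midpoint cases is my own assumption, not a figure Microsoft reports.

```python
# Microsoft quarterly capex ranges quoted above, in $B (pre-AI vs. now)
pre_ai_low, pre_ai_high = 5.0, 6.0
now_low, now_high = 13.0, 15.0

# Most conservative, most aggressive, and midpoint readings of the change
min_multiple = now_low / pre_ai_high   # 13 / 6
max_multiple = now_high / pre_ai_low   # 15 / 5
mid_multiple = ((now_low + now_high) / 2) / ((pre_ai_low + pre_ai_high) / 2)  # 14 / 5.5

print(f"{min_multiple:.1f}x to {max_multiple:.1f}x, midpoint {mid_multiple:.1f}x")
```

Only the extreme reading, from $5B to $15B, is a full tripling. The midpoint reading is roughly 2.5x, which is still a huge step-up.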

If Microsoft’s internal teams realize that, despite all the money poured into GPUs, they can’t generate enough return on investment to justify such spending, they will likely have to pause and re-evaluate their investment plans.

If all major customers decide to take a pause, this will have a domino effect on NVDA.

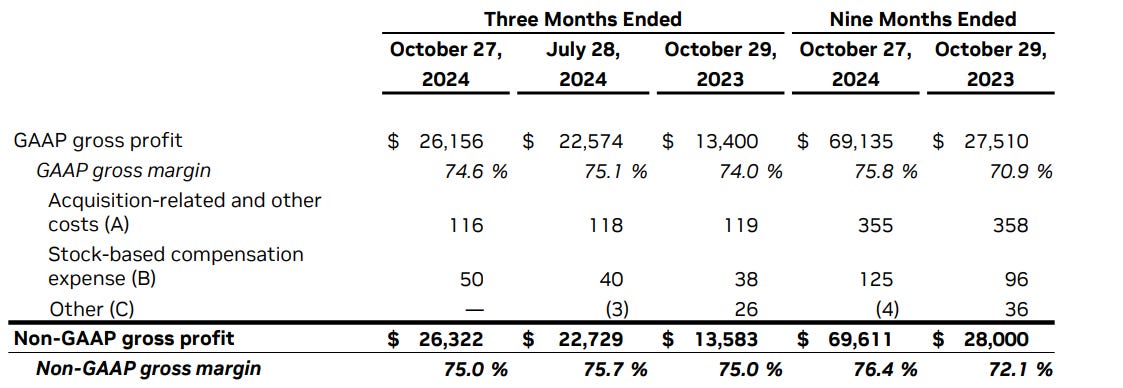

Right now, because of supply constraints, customers are waiting in line to get NVDA’s GPUs, which puts NVDA in a dominant position to charge high prices. Recall that NVDA’s gross margin is ~75%. That is higher than most semiconductor and hardware companies, and even higher than the gross margin of its own customer Microsoft (69% in the last quarterly earnings result), even though MSFT should arguably have the better margin profile given its business model (software vs. semiconductor/hardware).

For every $30,000 Hopper chip it sells, NVDA makes more than $21,000 in gross profit! Before the AI boom, NVDA’s GM% was in the ~60% range. That bump of 10+ percentage points is fragile, because it is driven by NVDA’s pricing power, i.e. the supply-demand dynamics.
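The per-chip arithmetic is simple enough to sketch in Python. Holding the $30,000 price fixed while swapping in the pre-AI margin is a simplifying assumption for illustration. Pre-AI products sold at different prices, so this isolates only the margin effect.

```python
def gross_profit_per_unit(price: float, gross_margin: float) -> float:
    """Gross profit dollars on one unit sold at `price` with margin `gross_margin` (0-1)."""
    return price * gross_margin


HOPPER_PRICE = 30_000  # approximate price per Hopper chip quoted above (assumption: held fixed)

for label, gm in [("~75% GM (AI boom)", 0.75), ("~60% GM (pre-AI)", 0.60)]:
    profit = gross_profit_per_unit(HOPPER_PRICE, gm)
    print(f"{label}: ${profit:,.0f} gross profit per chip")
```

At 75% the chip earns $22,500 of gross profit versus $18,000 at 60%. That gap of about $4,500 per chip is what is at stake if pricing power fades.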

If demand suddenly weakens and NVDA has to clear all the chip inventory, the company could face a double whammy: lower GPU shipment volumes and lower margins.
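To see why the two hits compound, here is a minimal sketch where gross profit = units x average selling price x gross margin. All inputs are hypothetical round numbers chosen for illustration, not NVDA guidance. The selling price is held flat so the margin effect is isolated.

```python
def gross_profit(units: float, asp: float, gross_margin: float) -> float:
    """Gross profit = units shipped x average selling price x gross margin."""
    return units * asp * gross_margin


# Hypothetical baseline: 1M GPUs at $30,000 each and a 75% gross margin
base = gross_profit(1_000_000, 30_000, 0.75)

# Hypothetical downturn: 20% fewer units, first at the same margin, then at 65%
volume_only = gross_profit(800_000, 30_000, 0.75)
double_whammy = gross_profit(800_000, 30_000, 0.65)

print(f"Volume hit only:     {volume_only / base - 1:.0%}")
print(f"Volume + margin hit: {double_whammy / base - 1:.0%}")
```

Under these made-up inputs, a 20% volume drop alone cuts gross profit by 20%. Adding a 10-point margin decline turns that into a roughly 31% drop.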

So far, cloud capex trends are still pretty healthy, but this is definitely the No.1 thing I will watch.

No.2 Return on Investment for Cloud Service Providers and Startups

The No.2 thing to watch is the ROI (Return on Investment) and monetization progress for cloud companies and startups.

To predict the capital expenditure trends for the Cloud Service Providers (CSPs) mentioned above, one way is to think like a CSP’s CFO, who ultimately makes the capital allocation decisions. If you were in Amy Hood’s seat (Microsoft’s CFO), you would have to evaluate the return on investment, i.e. how much incremental revenue (AI or non-AI) you are generating from investing in AI infrastructure.

How much Copilot revenue are you generating each year? How much extra capex are you putting in place? Are you seeing an improvement in your operating margin thanks to the higher prices charged for Office 365 with Copilot capabilities?

On the most recent MSFT earnings call, the CFO said:

“Azure and other cloud services revenue grew 33% and 34% in constant currency… Azure growth included roughly 12 points from AI services similar to last quarter. Demand continues to be higher than our available capacity.”

Here is some quick math. MSFT’s Intelligent Cloud revenue was $20B in the Sept-Quarter 2023. Using that as a rough proxy for the base, 12 points of growth from AI translates to about $2.4B of incremental quarterly revenue.

If we take MSFT’s corporate operating margin (OPM%) of 46% and a tax rate of 18.5% and apply them to the $2.4B of incremental cloud revenue from AI, we get a net operating profit after tax (NOPAT) of $2.4B x 46% x (1 - 18.5%) ≈ $900M.

The incremental quarterly capex is $14B (current run rate) - $6B (pre-AI run rate) = $8B.

Return on Invested Capital (ROIC) = $900M / $8B ≈ 11.3%. That is not high compared to MSFT’s current ROIC, but it is not too terrible either. Some AI bets will also take longer to play out, and we are taking a conservative view by counting only the reported “AI services revenue.”
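The same back-of-the-envelope chain can be written as a few lines of Python, so you can rerun it each quarter with updated inputs. The inputs are the figures quoted above. The ratio mirrors this article’s simplified approach of dividing one quarter’s NOPAT by one quarter’s incremental capex; the helper names are illustrative.

```python
def nopat(revenue: float, op_margin: float, tax_rate: float) -> float:
    """Net operating profit after tax on a slice of revenue."""
    return revenue * op_margin * (1 - tax_rate)


# Inputs quoted above, in $B per quarter
base_revenue = 20.0                 # MSFT Intelligent Cloud revenue, Sept-Q 2023 (proxy base)
ai_revenue = base_revenue * 0.12    # ~12 points of growth from AI services
capex_delta = 14.0 - 6.0            # current vs. pre-AI quarterly capex run rate

ai_nopat = nopat(ai_revenue, 0.46, 0.185)
roic = ai_nopat / capex_delta       # simplified: quarterly NOPAT / quarterly incremental capex

print(f"AI revenue ${ai_revenue:.1f}B, NOPAT ${ai_nopat:.2f}B, ROIC {roic:.2%}")
```

Without rounding NOPAT to $900M first, the ratio comes out at about 11.25%, in line with the ~11.3% above. A textbook ROIC would divide by cumulative invested capital rather than one quarter of capex, so treat this as a directional indicator.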

The CSPs’ ROIC is a good metric to monitor. If either the ROIC on AI investments or the corporate average ROIC starts to decline, it is reasonable to ask whether the CSPs will pause and reevaluate their spending.

Besides large cloud service providers, we also need to monitor the success and survival of AI startups. Many companies are now branding themselves as AI startups to get funding from venture capital firms.

Since most of them lack the team and expertise to build their own AI infrastructure and train large language models, they rely heavily on LLMs provided by companies such as OpenAI, which invest in GPUs.

However, if the use cases for these AI startups (legal AI, etc.) don’t play out, and if the startups fail to generate enough revenue to cover their expenses, we could see reduced investment in Large Language Model development and AI infrastructure buildout.

No.3 Competitive Landscape (AMD and ROCm)

The No.3 thing to watch is AMD’s data center revenue results and guidance.

Currently NVDA has 95%+ of the GPU market. It just reported $30.8B of data center revenue in the Oct-Q 2024. Some of that is networking, and GPU revenue is ~$28B. Meanwhile, AMD’s total GPU revenue target for full-year 2024 is only ~$5B, and we estimate its quarterly GPU revenue right now is about $1.2B (i.e. a $4.8B annual run rate).

AMD has only ~4% of the total market. NVDA has 95%+ market share, and that’s why it can earn a 75% GM%. Could this change?
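These shares follow directly from the quarterly revenue figures above. The sketch below assumes a two-vendor market made up of NVDA’s ~$28B of GPU revenue and AMD’s ~$1.2B, and ignores custom accelerators and other suppliers.

```python
# Approximate quarterly data center GPU revenue in $B (figures quoted above)
gpu_revenue = {"NVDA": 28.0, "AMD": 1.2}

# Assumption: these two vendors make up the whole market
total = sum(gpu_revenue.values())
for vendor, revenue in gpu_revenue.items():
    print(f"{vendor}: {revenue / total:.1%} share")
```

This gives roughly 96% for NVDA and 4% for AMD, consistent with the split above. Because other suppliers are left out, treat it as a rough cross-check rather than a precise market-share estimate.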

I am less concerned about AMD’s GPUs catching up and taking significant share from NVDA than about customers using AMD as a second source to put pressure on NVDA’s GPU pricing.

One advantage NVDA has over AMD is CUDA (Compute Unified Device Architecture), its platform that lets developers write general-purpose code that runs on NVDA’s GPUs. NVDA launched CUDA in 2007, whereas AMD only launched ROCm in 2016, almost 10 years later.

This creates a virtuous cycle for NVDA, which now has a significantly larger developer base. The more developers use NVDA’s GPUs and write code designed for them, the more optimized the CUDA ecosystem becomes. And the better the CUDA ecosystem, the more developers want to buy NVDA’s GPUs for data processing and machine learning workloads.

Although AMD is trying to catch up, this software moat is hard to challenge. In future articles we will write more about CUDA and the other important bets Jensen Huang made to solidify NVDA’s No.1 position (including Mellanox, etc.).

AMD’s data center revenue growth hasn’t accelerated. It is growing around 20% quarter over quarter (qoq), vs. NVDA’s 22% qoq growth in data center compute in Oct-Q, even though NVDA is fighting the law of large numbers from a much larger base.

If we start to see AMD’s data center revenue growth accelerate while NVDA’s slows, that could be a sign that AMD is slowly catching up. But for now, that doesn’t seem to be a big concern.
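The rule of thumb above can be written as a simple check to run each quarter. This is a hypothetical sketch. The function name is made up, and the growth paths in the usage lines are illustrative. In practice you would fill the lists with the reported qoq growth rates, oldest quarter first.

```python
from typing import Sequence


def amd_catching_up(amd_qoq: Sequence[float], nvda_qoq: Sequence[float]) -> bool:
    """Return True when AMD's latest qoq data center growth beats its prior quarter's
    while NVDA's latest qoq growth is below its prior quarter's."""
    if len(amd_qoq) < 2 or len(nvda_qoq) < 2:
        raise ValueError("need at least two quarters of growth rates for each company")
    amd_accelerating = amd_qoq[-1] > amd_qoq[-2]
    nvda_slowing = nvda_qoq[-1] < nvda_qoq[-2]
    return amd_accelerating and nvda_slowing


# Hypothetical growth paths (fractions, oldest quarter first)
print(amd_catching_up([0.20, 0.20], [0.22, 0.22]))  # False: neither condition is met
print(amd_catching_up([0.20, 0.30], [0.22, 0.15]))  # True: the warning pattern
```

Requiring both conditions at once avoids false alarms from a single strong AMD quarter while NVDA is still accelerating.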

No.4 NVDA’s Gross Margin

As I mentioned earlier, because demand is so strong, NVDA can charge customers pretty much whatever it wants.

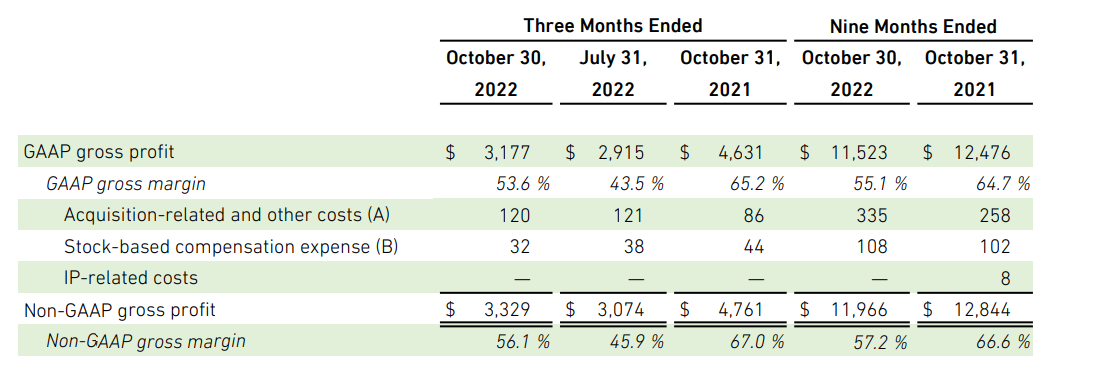

Before the AI boom, its non-GAAP gross margin was in the 45-65% range.

Since the AI boom, its GM% has been fairly stable at around 75%.

GM% is a direct reflection of a company’s pricing power over its customers. When you offer customers enough value (in semiconductor terms, Performance / Total Cost of Ownership, or TCO), they are willing to pay a premium.

But if competitors such as AMD are able to offer comparable performance at a lower price, NVDA will have to lower its prices to win customers. Currently NVDA holds the lead in this battle, mostly because it started investing in GPUs much earlier than AMD.

On the most recent earnings call, the CFO said gross margin will dip a bit, to the low 70s, with the new Blackwell launch, but will start to recover to ~75% in the second half of 2025.

This is quite common with new product launches: initial chip yields are usually not at their best, and NVDA has to share some of those costs with its manufacturing partner, TSMC. We will need to monitor whether NVDA’s GM% rebounds faster or slower than management’s timeline.

No.5 Prior Generation GPU’s Utilization Rate and Price

The pricing and utilization rate of NVDA’s prior-generation GPUs are also good leading indicators of the company’s financial health.

In late 2022, NVDA launched the Hopper architecture, and currently the majority of the GPUs it ships are based on Hopper. The next generation is called Blackwell, which NVDA will start to ship in high volume at the beginning of 2025.

If customer demand weakens, customers will likely not cut their Blackwell orders first, given that lead times for new products are generally much longer (lead time is the time between placing an order and receiving the finished chips). Giving up a place in a long queue is costly.

Let’s use a metaphor. Say you and a friend are waiting in two lines for tickets to two Broadway shows. Later, you start thinking about going to dinner instead of seeing one of the shows. If you have to give up your place in one of the lines, you will likely give up the shorter line, i.e. the less popular show.

As a result, any weakening in demand will not show up in Blackwell demand first. It will show up in Hopper demand and Hopper pricing.

The change in demand for Hopper will show up in several places: orders, utilization rate, and price. If customers already have enough Hopper GPUs on hand, the utilization rate will drop first, meaning a smaller share of their installed GPUs is actively in use. Then they will cut new orders. Eventually NVDA will have to lower the Hopper price to clear its inventory.

This is exactly what happened during the crypto bubble in late 2018. People had been buying a lot of Pascal GPUs (an older architecture) and using them for crypto mining. When crypto prices plunged, demand suddenly dropped and NVDA was left with too much Pascal inventory just ahead of the launch of its new Turing products.

NVDA had to aggressively cut the prices of Pascal-based GPUs to clear the inventory, so that customers could purchase the newly launched Turing chips.

NVDA may not publicly share Hopper pricing, but management does occasionally comment on supply constraints on earnings calls. One important question to ask is whether those constraints are limited to the new Blackwell chips, or whether Hopper is also supply constrained.

Gross margin is another good metric to follow, since lower prices will show up as a lower GM%.
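To see how quickly price cuts flow into GM%, here is a minimal sketch. It assumes the unit cost stays unchanged when the price falls, and the price cuts are hypothetical. If the old margin is GM and the price falls by a fraction c, the new margin is 1 - (1 - GM) / (1 - c).

```python
def gm_after_price_cut(gross_margin: float, price_cut: float) -> float:
    """New gross margin if the selling price falls by `price_cut` (a fraction)
    while the unit cost stays the same (assumption)."""
    unit_cost_share = 1 - gross_margin   # unit cost as a fraction of the old price
    new_price_share = 1 - price_cut      # new price as a fraction of the old price
    return 1 - unit_cost_share / new_price_share


# Hypothetical price cuts applied to a ~75% gross margin
for cut in (0.10, 0.20, 0.30):
    print(f"{cut:.0%} price cut: GM {gm_after_price_cut(0.75, cut):.1%}")
```

Because cost is fixed, every dollar of price cut comes straight out of gross profit. A 20% cut, for example, takes a 75% GM down to about 69%.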

Other Warning Signs

There are other emerging warning signs we need to watch out for, such as the outlook for the AI scaling laws.

We will discuss these in future articles. Subscribe so you don’t miss the latest updates!