💥What questions are Wall Street analysts asking NVDA right now? 💥

NVDA's GTC Financial Analyst Q&A Session Full Transcript

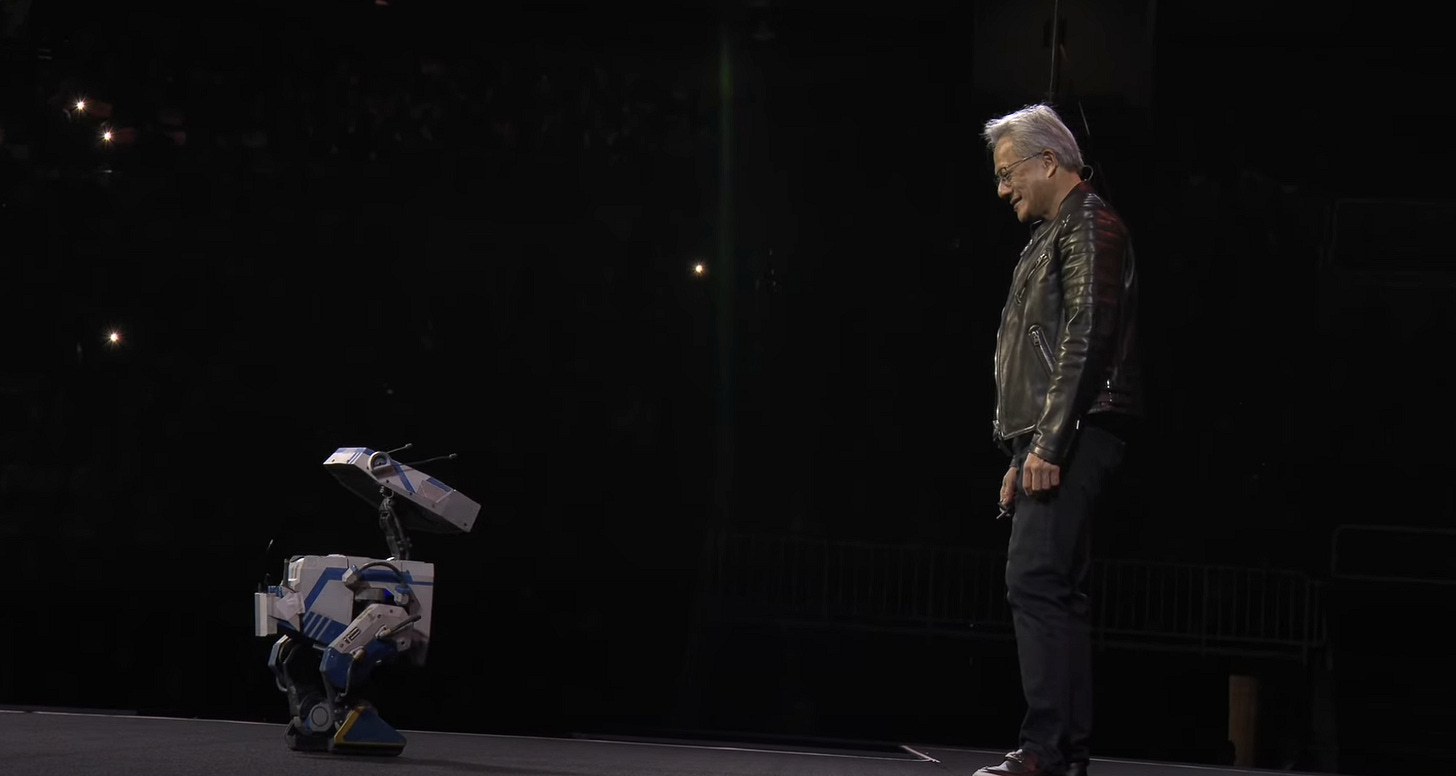

Nvidia’s annual GTC (GPU Technology Conference) is this week. CEO Jensen Huang delivered a super impressive keynote yesterday. Link to the keynote here: https://www.nvidia.com/gtc/keynote/?regcode=no-ncid&ncid=no-ncid

Jensen has great vision and did a great job showcasing Nvidia’s product roadmap in the coming years. However, for today’s post, I want to focus on the Financial Analyst Q&A session. If you are an investor in NVDA, you need to not only understand the company’s long term roadmap, but also the near term debate that could impact the stock price.

In this one-hour Q&A session, Jensen discussed DeepSeek’s impact on compute demand, AI for cloud, AI for enterprise IT, AI for robotics, inferencing, cloud capex sustainability (an issue we raised earlier here), tariff impact, and many other key topics.

So here we go:

Opening Remarks – Jensen Huang:

Good morning. Great to see all of you. Let's see. We announced a whole lot of stuff yesterday. And let me put it in perspective. The first is, as you know, everybody's expecting us to build AI infrastructure for cloud. That I think everybody knows.

And the good news is that the understanding of R1 was completely wrong. That's the good news. And the reason for that is because reasoning should be a fantastic new breakthrough. Reasoning includes better answers, which makes AI more useful; solving more problems, which expands the reach of AI; and of course, from a technology perspective requires a lot more computation. And so, the computation demand for reasoning AIs is much, much higher than the computation demand of one shot pre-trained AI. And so I think everybody now has a better understanding of that. That's number one.

Number two. And so, the first thing is inference, Blackwell, incredibly good at it. Building out AI clouds. The investments of all the AI clouds continues to be very, very high. The demand for computing continues to be extremely high, okay. So I think that that's the first part. The part that I think people are starting to learn about, and we announced yesterday, and I'll just take – I could have done a better job explaining it. And so, I'm going to do it again.

In order to bring AI to the world's enterprise, we have to first recognize that AI has reinvented the entire computing stack. And if so, all of the data centers and all the computers in the world's enterprises are obviously out of date. And so, just as we've been re-modernizing the world's AI clouds, all the world's clouds for AI, it's sensible we're going to have to re-rack, if you will, reinstall, modernize, whatever words, the world's enterprise IT. And doing so is not just about a computer, but you have to reinvent computing, networking and storage.

And so, I didn't give it very much time yesterday because we had so much content. But that part, enterprise IT, which represents about half of the world's CapEx, that half needs to be reinvented. And our journey begins now. Our partnership with Dell and HPE, and this morning, the reason why Chuck Robbins and I were on CNBC together is to talk about this reinvention of enterprise IT. And Cisco is going to be an NVIDIA networking partner. I announced yesterday, basically all of the world's storage companies have signed on to be NVIDIA's storage technology and storage platform partners.

And of course, as you know, computing is an area that we've been working on for a long time, including building some new modular systems that are much more enterprise-friendly. And so, we announced that yesterday. Spark – DGX Spark, DGX Station, and all of the different Blackwell systems that will be coming from the OEMs. Okay. So, that's second. So, now, we're building AI infrastructure not just for cloud, but we're building AI infrastructure for the world's enterprise IT.

And the third is robotics. When we talk about robotics, people think robots. And this is a great thing. It's fantastic. There's nothing wrong with that. The world's tens of millions of workers short. We need lots and lots of robots. However, don't forget the business opportunity is well upstream of the robot. Before you have a robot, you have to create the AI for the robot. Before you have a chatbot, you have to create the AI for the chatbot. That chatbot is just the last end of it. And so, in order for us to enable the world's robotics industry, upstream is a bunch of AI infrastructure we have to go create to teach the robot how to be a robot. Now, teaching a robot how to be a robot is much harder than in fact even chatbots. For obvious reasons, it has to manipulate physical things and it has to understand the world physically. And so, we have to invent new technologies for that. The amount of data you have to train with is gigantic. It's not words. It's video. It's not just words and numbers. It's video and physical interactions, cause and effects, physics. And so, that new adventure we've been on for several years, and now it's starting to grow quite fast. Our robotics business includes self-driving cars, humanoid robotics, robotic factories, robotic warehouses, lots and lots of robotic things. That business is already many billions of dollars, is at least $5 billion today, the automotive industry. And it's growing quite fast, okay. And yesterday, we also announced the big partnership with GM who's going to be working with us across all of these different areas. And so, we now have three AI infrastructure focuses, if you will: cloud data centers, enterprise IT, and robotic systems.

I would say those three buckets I've talked about – I talked about those things yesterday. And then foundationally, of course, we spoke about the different parts of the technology, pre-training and how that works, and how pre-training works for reasoning AIs, how pre-training works for robotic AI, how reasoning inference impacts computing and therefore directly how it impacts our business. And then, answering a very big question that a lot of people seem to have and I never understood why, which is how important is inference to NVIDIA. Every time you interact with a chatbot and you interact with AI on your phone, you're talking to NVIDIA GPUs in the cloud. We're doing inference. The vast majority of the world's inference is on NVIDIA today. And it is an extremely hard problem. Inferencing is a very difficult computing problem, especially at the scale that we do. And so, I spoke about inferencing technology. But at the technology level, I spoke about that. But at the industrial level, at the business level, I spoke about AI infrastructure for cloud, AI infrastructure for enterprise IT, AI infrastructure for robotics, okay. So I'll just leave with that. Thank you.

Question – Ben Reitzes:

Hi. It's Ben Reitzes with Melius Research. Thank you for having us. This is obviously a lot of fun. I said that at the last meeting. And Jensen, I was thinking about this question quite a bit. I want to ask a big question – a big picture question about TAM. You talked about your share of data center spend last year or data center spend as your TAM. And your share was about 25%, 30% last year. You used the Dell'Oro forecast. They go to $1 trillion, but that's about a 20% CAGR. So, the Street here has your data center growing about 60%, but then slowing to the rate of 20% thereafter. But I'm just thinking like you're in all these areas that are growing faster than the market. You're at a 25-ish percent share of the overall data center spend. I'm thinking of Dell'Oro. I doubt they have robotics and AV data center infrastructure in there. So, my question is, with that backdrop, why wouldn't your share of data center spend go up over a three to five-year period versus this 25%? And isn't that Dell'Oro CAGR that you put up there actually low, since it doesn't seem like autonomous and robots are in there? So, why wouldn't your share go up?
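The arithmetic behind this question can be sketched in a few lines. This is only an illustration, not a forecast: it normalizes last year's total data center spend to 1.0 and uses the figures quoted in the question (roughly 25% share, a 20% market CAGR, and Street growth of 60% followed by 20%). The function name `share_path` is hypothetical.

```python
def share_path(start_share, market_cagr, vendor_growth_by_year):
    """Return the vendor's share of total spend after each year.

    Total spend is normalized to 1.0 in year 0, so only ratios matter.
    """
    market = 1.0
    vendor = start_share
    shares = []
    for growth in vendor_growth_by_year:
        market *= 1.0 + market_cagr
        vendor *= 1.0 + growth
        shares.append(vendor / market)
    return shares


# Figures quoted in the question: ~25% share, 20% market CAGR,
# vendor growth of 60% in year one and 20% in the years after.
shares = share_path(0.25, 0.20, [0.60, 0.20, 0.20])
for year, share in enumerate(shares, start=1):
    print(f"year {year}: {share:.1%}")
```

Under these inputs the share steps up once, in the 60% year, and then stays flat, because a vendor growing at the market rate cannot gain share. That is the tension the question points at.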

Answer – Jensen Huang:

Excellent question. Yesterday, as I was explaining it, remember, I said two things. I said one dynamic is that the world is moving from the general purpose computing platform to GPU accelerated computing platform. And so, with that phase shift, that platform shift from one to the other, whatever the CapEx of the world is, it is very, very certain that our percentage of that's going to be much higher going forward. It used to be 100% general purpose computing, 0% accelerated computing, but it is well-known now that out of $1 trillion, the vast majority of it would be for accelerated computing. That's number one. So, whatever the forecast people have for data centers, I think NVIDIA's proportion of that is going to be quite large. And we're not just building a chip, we're building networking and switches and we're basically building systems components for the world's enterprise – for the world's data center. So, that's number one.

The second thing that I said that nobody's got right, none of these forecasts has this concept of AI factories. Are you guys following me? It's not a multipurpose data center. It's a single function AI factory. And these GPU clouds and Stargates and so on and so forth, okay. These AI factories are not accounted. Do you guys understand? Because nobody knows how to go do that. And these multiple hundred-billion-dollar CapEx projects that are coming online, it's not part of somebody's data center forecast. Are you guys following me? How could they possibly know about these things? We're inventing them as we speak. And so, I think there's two ideas here. And I didn't want to be too prescriptive in doing so because nobody knows exactly. But here's some fundamental things that I do know. I fundamentally believe that accelerated computing is the way forward.

And so, if you're building a data center and it's not filled with accelerated computing systems, you're building it wrong. And our partnership with Cisco and Dell and HPE, and all these enterprise IT gear, that's what that's about, okay. And so, that's number one. Number two, AI factories. GM's going to have AI factories. Tesla already has AI factories. Just as they have car factories, they have AI factories to power those cars. Every company that has factories will have an AI factory with it. Every company that has warehouses will have AI factories with it.

Every company who has stores will have AI factories for those stores to build the intelligence to operate the stores. And that store, of course, as you know, is an e-tail store. There's an AI that runs Amazon's e-tail store. There's an AI that runs Walmart's digital store. In the future, there's going to be AI that runs also the physical store. A lot of the systems will be AI and the systems inside – well, physical systems, even robotics will be AI-driven. And so, I think those parts of the world are just simply not quantified. Does that make sense? No analyst has figured out that. Not yet. It will be common sense here pretty soon. There's no question in my mind that out of $120 trillion global industries, that a very large part of that, trillions of dollars, trillions of dollars of it will be AI factories. There's no question in my mind now. Just as today, manufacturing energy, manufacturing energy, they're invisible too. Manufacturing energy is an entire industry. Manufacturing intelligence will be an entire industry. And we are, of course, the factories of that. And so, that layer has not been properly quantified. And it's the largest layer of what we do. It is the largest layer of what we do by far. But we are still going to, in the process, revolutionize how data centers are built, and it will be 100% accelerated. I am absolutely certain, before the end of the decade, 100% of the world's data centers will be accelerated.

Question – C. J. Muse:

Hey good morning. C. J. Muse from Cantor. Thank you for hosting this morning. One of the key messages yesterday was inference scaling was – are actually accelerating, led by test-time scaling for enhanced reasoning. So, my question, how does the work NVIDIA is doing to push the inference Pareto frontier impact how you think about the relative sizing of the inference market, your competitive positioning? And then could you discuss Dynamo briefly? Is there a way to isolate the productivity gains from this optimization software that we should be thinking about? Thanks so much.

Answer – Jensen Huang:

Yeah. Really appreciate it. Going backwards, as you know, people say NVIDIA's position is so strong because our software stack is so strong. And our training components in it, it's not just one thing because training is a distributed problem. And the computing stack, our software runs on the GPU, it runs on the CPU, it runs in the NICs. It runs on the switches. It runs all over the place. And so, you have to figure out which framework, which library, which systems to integrate it all into. And because no one company in the world develops the software holistically and totally for training, we have to break it up into a whole lot of parts and integrate it into a whole lot of systems, which is the reason also why our capability is so respected because it seems like everywhere you look, there's another piece of NVIDIA software. And that is true.

Now, inference is software scaling at a level that nobody has ever done before. Inference is enormously complicated. And the reason, as I was trying to explain yesterday to give it some texture, some color that this is the extreme – the world's extreme computing problem because everything matters. Everything matters. And it's a supercomputing problem where everything matters. And that's quite rare. Supercomputers and supercomputing applications are used by one person. It's not used by millions of people at the same time. And everybody cares. And the answer matters. And so, the amount of software we have to develop for that is quite large. And we put it under the umbrella called Dynamo. It's got a lot of pieces of technology in it. The benefit of Dynamo ultimately is, without it – just as without all of NVIDIA training software, how do you even do it?

And so, the first part is it enables you to do it at scale. And one of the really innovative AI companies – there's a company called Perplexity and they find incredible value in Dynamo and the work that we do together. And the reason for that is because they are serving AI at very large scale. And so, anyways, that's Dynamo. The benefit of it is hard to measure exactly, how many X factors, but it's less than 10, it's probably less than 10.

On the one hand, in terms of once you – if you do a good job versus not do a good job. But if you don't have it, you don't do it at all, okay. So, it's essential. Hard to put an X factor on it. With respect to inference, here's the thing. Reasoning generates a whole lot more tokens. The way reasoning works, the AI is just talking to itself. Do you guys understand what I'm talking about? It's thinking. You're just talking to yourself. And on the one hand, the reason why we – we used to think that 20 tokens per second was good enough for chatbots. It's because a human can't read that much faster anyways. So, what's the point of inferencing much faster than 20 tokens per second if the human on the other side of it can't read it. But thinking – the AI can think incredibly fast. And so, we're now certain that we want the performance of inference to be extremely high so that the AI can think some of it out loud, most of it to itself. And so, we now know that's likely the way that AI is going to work. Internal thinking, out loud thinking, and then final answer production. And then interactive part of it, which is getting more color, getting more explanation, so and so forth.

But the thinking part is no longer one shot. And the difference between thinking versus not thinking is like just a kneejerk reaction of an answer.

The number of tokens versus thinking tokens, it's at least 100 times. The point of putting a number on it was almost – I just couldn't – I just put an arrow on it because I know that there's no way you could put an answer on how a simple – and yet, as you know, people love simpler answers. And you guys know that I always have a hard time with that because it always seems more complicated in my head. But I think 100x is easily so, most likely, nearly all the time 100x. Now, here's the part that is the X factor on top of that. This is the part that people don't consider. You now have to generate a lot more tokens, but you have to generate it way faster. And the reason for that is nobody wants to wait until you're done thinking. And it's still an Internet service. And the quality of the service and the engagement of the service has something to do with the response time of the service, just like search. If it takes too long and it's measurably so, if it takes too long, people just give up on it. They don't want to come back. They won't use it.
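A rough sketch of why "a lot more tokens" and "way faster" compound. The 20 tokens per second and the 100x multiplier are the figures quoted in the answer; the 500-token answer length is a hypothetical placeholder chosen only to make the arithmetic concrete, and the function name is illustrative.

```python
def response_seconds(answer_tokens, thinking_multiplier, tokens_per_second):
    """Seconds to generate a response whose total output is
    thinking_multiplier times the length of the visible answer."""
    total_tokens = answer_tokens * thinking_multiplier
    return total_tokens / tokens_per_second


ANSWER_TOKENS = 500        # hypothetical answer length, not from the session
READING_RATE = 20          # tokens/second, the chatbot-era figure quoted above
THINKING_MULTIPLIER = 100  # "at least 100 times" more tokens with reasoning

one_shot = response_seconds(ANSWER_TOKENS, 1, READING_RATE)
reasoning = response_seconds(ANSWER_TOKENS, THINKING_MULTIPLIER, READING_RATE)
# Generation rate needed for the reasoning response to take no longer
# than the one-shot response did.
required_rate = ANSWER_TOKENS * THINKING_MULTIPLIER / one_shot

print(f"one-shot: {one_shot:.0f} s")
print(f"reasoning at the same rate: {reasoning:.0f} s")
print(f"rate needed to match: {required_rate:.0f} tokens/s")
```

With the response time held fixed, the required per-user generation rate scales by the same multiplier as the token count, before accounting for the larger model mentioned in the answer.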

And so, now we have to take this problem where we're producing, we're using a larger model because it's more reasoning, more capable. It also produces a lot more tokens. And we have to do it a lot faster. So, how much more computation do you need, which is the reason why Blackwell's – it came just in time. Grace Blackwell with NVLink72, FP4, better quantization, the fast memory on Grace. Every single part of the architecture people now go, man, how did you guys realize all that? How did you guys reason through all of that? And how did you guys get all that ready?

But now, Grace Blackwell, NVLink72 came just in time. And I still think that 40x that Grace Blackwell provides is a big boost over Hopper. But I still think, unfortunately, it's many orders of magnitude short. But that's a good thing. We should be chasing the technology for hopefully – hopefully we'll be chasing the technology for a decade, if not more.

Question – Stacy A. Rasgon:

Great. Thanks. Stacy Rasgon at Bernstein Research. What I did want to ask you about was the chart you showed yesterday, the Hopper traction versus the Blackwell traction. And it showed – I think it was 1.3 million Hopper shipments for calendar 2024. You talked about 3.6 million Blackwell GPUs. I guess, that's 1.8 million chips because it's a two-for-one. How do I interpret that chart because, like, 1.8 million Blackwell chips would be, I don't know, $50 billion, $60 billion, $70 billion worth tracking. So, it was great, but that seems like a lot. So, like, can you maybe just describe what that chart was actually trying to tell us, and how to interpret it, and what the read for it is for the rest of the year?
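The back-of-envelope math in this question can be reproduced as below. It uses only the figures quoted in the question (3.6 million Blackwell GPUs, two GPUs per chip, and a $50 billion to $70 billion range); the per-chip value is simply what those figures imply when divided out, not a disclosed price, and the helper name is hypothetical.

```python
BLACKWELL_GPUS = 3_600_000  # GPU count quoted from the chart
GPUS_PER_CHIP = 2           # the "two-for-one" in the question

chips = BLACKWELL_GPUS / GPUS_PER_CHIP


def implied_value_per_chip(total_revenue, chip_count):
    """Dollar value per chip implied by a total revenue figure."""
    return total_revenue / chip_count


print(f"Blackwell chips: {chips / 1e6:.1f} million")
# Revenue range floated in the question, in dollars.
for revenue in (50e9, 60e9, 70e9):
    per_chip = implied_value_per_chip(revenue, chips)
    print(f"${revenue / 1e9:.0f}B implies about ${per_chip:,.0f} per chip")
```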

Answer – Jensen Huang:

Yeah. I really appreciate that. This is one of those things where, Stacy, I was arguing with myself whether to do it or not. And here's the question I was hoping to answer.

R1 came, the amount of compute that you need is like gone to zero. And the CSPs, they're going to cut back on. And then there was rumors of somebody cancelling. It was so noisy. But I know exactly what's happening inside the system. Inside the system, the amount of computation we need has just exploded. Inside the system, everybody is dying for their compute. They're trying to rip it out of everybody's hands. And I didn't know exactly how to go and do that without just giving a forecast. And so, what I did was I – the people that were asking about were just the top four CSPs really. Those are the CapEx that everybody kind of monitors. And so, I just took the top four CSPs and I compared it year-to-year, and I obviously therefore underrepresented the demand, okay. I understand that.

But I'm just simply trying to show that the top four CSPs are fully invested. They have lots of Blackwells coming. And the CapEx, the capital investment that they're making is solid. Here's the things that I didn't include that obviously are quite large. The Internet services that are – they're not public clouds, but they're Internet services, for example, X and Meta, and I didn't include any of that. I obviously didn't include enterprise car companies and car AI factories and I didn't include international. I didn't include a mountain of startups that all need AI capacity. I didn't include Mistral. I didn't include SSI, TMI, all of the great companies that are out there doing AI. I didn't include any of the robotics companies. I basically didn't include anything.

And so, that I understood which then begs the question – and I was asking myself the same thing on stage, which begs the question, why did you do it? But there's so many questions about the CSPs' investments that I kind of felt like maybe I'll just get that behind us. But I hope it did. I think that the CSPs are fully invested, fully engaged, and there are two things that are driving them. One, they have to shift from general purpose computing to accelerated computing. The idea of building data centers full of traditional computers, it's not sensible to anybody.

And so, nobody wants to do that. And so, everybody is moving to this new way of doing computing, modern machine learning. And then second, there are all these AI factories that are just built for just one purpose only, and that's not very well characterized and well-followed by most. And that I think in the future, these specialized AI factories and that's why I call it AI factories. These specialized AI factories, that's really what the industry is going to look like someday, above this $1 trillion of data centers.

Question – Stacy A. Rasgon:

What was the number, though? Was that shipments plus orders to those four customers within the first 11 weeks of the year? Is that what that 3.6 million is?

Answer – Jensen Huang:

No, that 3.6 million is what they have ordered from us so far. Our demand is much greater than that, obviously.

Question – Vivek Arya:

Thank you. Good morning. Hi Jensen. Hi Colette. Vivek Arya from Bank of America Securities. Thanks for hosting a very informative event. Jensen, I had a near and sort of intermediate term question. So, on the near term, Blackwell execution and how it has – and it's an incredibly complex product, obviously, very strong demand for it. But it has pressured gross margins a lot, right. I think some growing pains were to be expected, but we have seen margins go from high 70s to low 70s. So, can you give us some confidence and assurance that as you get to Blackwell Ultra and Rubin that we should expect margins to start heading back, that there will be more profitable products versus what Blackwell has been so far? So, that's kind of the near term.

And then, as we look out into 2026, Jensen, what are the hyperscale customers telling you about their CapEx plans in general? Because there's a lot of building of infrastructure. But from the outside, we don't always get the best metrics to kind of visualize what the ROI is on these investments. So, as you look out at 2026, what's your level of confidence that their ability and desire to spend in CapEx can kind of stay on this pace that we have seen the last few years? Thank you.

Answer – Jensen Huang:

Yeah. Our margins are going to improve because, as I was explaining yesterday, we changed the architecture of Hopper to Blackwell, not just the chip-to-chip name, but we changed the system architecture and the networking architecture completely. And when you change the architecture that dramatically across the system, and now we've succeeded in doing so, there are so many components that are hard to exactly quantify cost and this and that that now that it's all accumulated, it's challenging in the transition. Everybody's cost is a little higher. Everybody's new connector is a little higher. Everybody's new cable is a little higher. Everybody's new everything's a little higher. But now that we ramp up into production, we'll be able to get those yields and those costs down, okay. So, I'm very certain, I'm quite confident that yields will improve as we use this basic architecture now called Grace Blackwell, this new NVLink72 architecture. And we're going to ride this for about 3 to 3.5, 4 years, okay. And so, we have opportunities between here and now that we're ramped up to improve yield and improve gross margins. And so, that's that.

In terms of CapEx, today, we're largely focused on the CSPs. But I think very soon, starting almost now, you're starting to see evidence that in the future these AI factories will be built even independently of the CSPs. We're going to see some very large AI factory projects, hundreds of billions of dollars of AI factory projects. And that's obviously not in the CSPs. But the CSPs will still invest and they will still grow. And the reason for that is very clear. Machine learning is the present and the future. You're not going to go back to hand coding. The idea of large-scale software being – capabilities being developed by humans only as we're sitting there typing. That's almost quaint. It's cute. It's funny. But it's not going to happen long term, not at scale. And so, we now know that machine learning and accelerated computing is the path forward. I think that is a sure thing now.

The fact that every single CEO understands this. The fact that every single technology company is here, that we have partnerships from the Dells and HPEs that are very understandable to Cisco, who have now also joined us. And industries – healthcare industries here and retail here, and GM is here and car company startups to traditional, you're starting to see that people realize that this is the computing method going forward. And so, I think that data center, the part that I do know I have great confidence in is that the percentage of that purple is going to keep becoming gold. And remember that purple is compute CapEx. That has the opportunity to be 100% gold, right. And so, I think that journey is fairly certain for me now, okay. And then the rest of it is how much more additional AI factory gets built on top of that is what we'll have to discover into. But the way I reason about the fact that it's going to be significant is that every single industry in the world is going to be powered by intelligence. And every single company is going to be manufacturing intelligence. And out of that $120 trillion or so in the world, how much of it is going to be about intelligence manufacturing? Pick your favorite number, but it's measured in trillions.

Question – Timothy Arcuri:

Hi. It's Tim Arcuri at UBS. Thanks. Jensen, I wanted to ask about custom ASIC. And I ask because if – we listen to some of the same CSPs that you put up on that slide. And we listen to some of the companies who are making custom ASICs. Some of the deployment numbers sound pretty big. So, I wanted to just hear your position how you're going to compete with custom ASICs, how they can possibly compete with you, and maybe how some of your conversations with these same customers would sort of inform your view in terms of how competitive custom ASIC will be to you. Thanks.

Answer – Jensen Huang:

Yeah. First of all, just because something gets built doesn't mean it's great. Number two, if it's not great, all of those companies are run by great CEOs who are really good at math. And because these are AI factories and it affects your revenues, not just your costs. It affects your revenues, not just your cost. It's a different calculus. Every company only has so much power. You just got to ask them. Every single company only has so much power. And within that power, you have to maximize your revenues, not just your cost. So, this is a new game. This is not a data center game. This is AI factory game. So, when the time comes, that simple calculus as I was using yesterday, that simple math that I was showing yesterday still has to be done, which is the reason why so many projects are started and so many are not taken into production.
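The "different calculus" described here, maximizing revenue inside a fixed power budget, can be sketched as follows. The session does not give the formula or any inputs, so everything in this block is an assumption for illustration: the function name, the power budget, the throughput-per-megawatt figures, and the token price are all hypothetical placeholders.

```python
def factory_revenue(power_mw, tokens_per_sec_per_mw, price_per_million_tokens):
    """Annual revenue of a power-limited AI factory at a fixed token price."""
    seconds_per_year = 365 * 24 * 3600
    tokens_per_year = power_mw * tokens_per_sec_per_mw * seconds_per_year
    return tokens_per_year / 1e6 * price_per_million_tokens


# All numbers below are hypothetical, not figures from the session.
POWER_MW = 100  # fixed power budget of the site
PRICE = 2.0     # dollars per million tokens

baseline = factory_revenue(POWER_MW, 1_000_000, PRICE)
efficient = factory_revenue(POWER_MW, 3_000_000, PRICE)
print(f"revenue ratio at the same power: {efficient / baseline:.1f}x")
```

Because power is the fixed input, throughput per megawatt feeds straight into revenue in this simplified model, which is why the comparison is framed around revenue rather than chip cost alone.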

Because there's always another alternative. We are the other alternative. And that alternative is excellent. Not normal excellent, as you know. Everybody's still trying to catch up to Hopper. I haven't seen a competitor to Hopper yet. And here we're talking about 40x more. And so our roadmap is at the limits of what's possible. Not to mention, we're really good at it. I'm completely dedicated to it. A lot of people have a lot of businesses to do. I've got this one business to do. And we're all in on this. 35,000 people doing one job. Been doing it for a long time. The depth of capability, the scope of technology, as you saw yesterday, pretty incredible. And it's not about building a chip. It's building an AI factory. We're talking about scale up, scale out. We're talking about networking and switches and software. We're talking about systems. And these system architectures are insane.

Even the system itself – notice, 100% of the computer industry, 100% of the computer industry has standardized on NVIDIA system. Why? Because try to build an alternative. Building the alternative is not even thinkable because look at how much investment we put into building this one. And so, even the system is hard. Well, people used to think system is just sheet metal, hardly sheet metal, 600,000 parts is hardly sheet metal. And so, all of the technology is hard. We're pushing every single dimension to the limit because we're talking about so much money. The world is going to lay down hundreds of billions of dollars of investment in the next – just a couple or two, three years. Let's do the thought experiment.

Let's say, you want to stand up a data center and you want it to be fully operational in two years' time. When do you have to place the PO on that? Today. So, let's suppose you had to place $100 billion PO on something. What architecture would you place it on? Literally, based on everything you have today, there's only one. You can't reasonably build out giant infrastructures with hundreds of billions of dollars behind it hoping to turn it back on and to get the ROIC on it unless you have the confidence that we are able to provide you. And we can provide you complete confidence and singularly so.

We're the only technology company where if I had to go place $100 billion on an AI factory, oh, that's interesting. I did. Literally the only company who's willing to place $100 billion POs across the industry to go build it out. And you guys know, that's the depth of our supply chain, and we are and we have. Give me another one that has that depth and that length. And now to the point where we've got to go and work with the supply chain upstream and downstream to prepare the world for hundreds of billions of dollars working towards trillions of dollars of AI infrastructure buildout, our partnerships with power companies and all of the cooling companies, the Vertivs, the Schneiders, our partnership with BlackRock. The partnership network necessary to prepare the world to go build out trillions of dollars of AI infrastructure, that's undergoing as we speak. What architecture and what ASIC chip do you go select? That doesn't even make sense. It's a weird conversation even.

And so, I think that, one, the game is quite large, the investment level, therefore the risk level is quite high. And so, the certainty that you're selecting the best is quite important, and the certainty that you can execute, vital. We are the company you can build on top of. We're the company, we're the platform that you can build your AI infrastructure on. And we're the company that you can build your AI infrastructure strategy on. And so, I think it includes chips, but it's much, much more than that.

Question – Mark Lipacis:

Hi. Mark Lipacis from Evercore ISI. Thank you so much for the informative presentation yesterday and sharing your insights today. Jensen, you brought up the expression homogeneous cluster. Why is this concept important? Why would it be better than a heterogeneous cluster? And I think, like, an investor question would be here, like, as your customers scale to 1 million node clusters, is it your view that those will more likely be homogeneous than heterogeneous? Thank you.

Answer – Jensen Huang:

The answer to the last question, Mark, I appreciate the question, yes. But it wouldn't be my style to just leave it there because your understanding is too important to me. So, let me work on it backwards. So, GTC, I always assume that because – so, you know, the first GTC NVIDIA ever had was at the Fairmont Hotel, okay. And it's not far from here. Right next to Great America. And literally the entire GTC was this room divided in half. Literally, 100% of the audience was scientists. Because they were the only users of CUDA. Now, my recollection of GTC and what GTC means to me is still that. Whole bunch of computer scientists, whole bunch of scientists, whole bunch of computer engineers, and we're all building this computer, computing platform.

And so, I've always somehow had in my head that my GTC talks could be nerdier than usual. And so, I show some charts that no CEOs would or should. And so, if you saw the Pareto frontier, the frontier simply is a way of saying underneath that curve in our simulation was hundreds of thousands of other dots. Meaning, that data center, that factory, depending on the workload. Depending on the workload and depending on the work style, the prompt style. Remember, a prompt is how you program a computer now. And therefore, every time you prompt it differently, you're actually programming the computer differently. And therefore, it processes it differently. And so, depending on your style of prompting and deep research or just a simple chat or search, depending on that spectrum of questioning or prompting, depending on whether it's agentic or not, okay, depending on all of that, the data center's configuration is different.

And the way you configure it is using a software program we call Dynamo. It's kind of like a compiler, but it's a runtime. And it sets up the computer to be parallelizable in different ways. Sometimes it's Tensor Parallel, Pipeline Parallel, Expert Parallel. Sometimes you want to put the computer to do more floating point. Sometimes you want to use it to do more token generation, which is more bandwidth-challenged. And all of this is happening at the same time. So, if every single computer has a different behavior or if every computer has a different programming model, the person who's developing this thing called Dynamo would just go insane. And the computer would be underutilized because you never know exactly what is needed. It's kind of like in the morning you need more of this; in the night, you need more of that. And so, if everything was fungible, then you don't care, which is the reason why homogeneous is better.

Every single computer is fungible. It could be literally – from Hopper on, every single computer could be used for context processing or decode from pre-fill to decode, from a Tensor Parallel to Expert Parallel. Every single computer could be flexibly used in that way. Running Dynamo. And so, the utilization of the data center will be higher, the performance will be higher, everything will be better, energy efficiency will be better. And if you had to do this, if this computer can only be good for a pre-fill or this computer is only good for decode or this computer is only good at Expert Parallel, it's kind of weird. Too hard.
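The utilization argument above can be illustrated with a toy capacity calculation. This is only a sketch under assumed numbers: the demand figures and function names below are hypothetical and do not come from the session, and real scheduling in a runtime such as Dynamo is far more involved. It compares a pool where any machine can do pre-fill or decode against two dedicated pools.

```python
# Hypothetical demand, in "machines needed", over four periods of a day.
prefill_demand = [80, 60, 30, 20]  # assumed: heavier early in the day
decode_demand = [20, 40, 70, 80]   # assumed: heavier late in the day


def machines_needed_fungible(prefill, decode):
    """Any machine can do either job, so size for the peak combined load."""
    return max(p + d for p, d in zip(prefill, decode))


def machines_needed_dedicated(prefill, decode):
    """Each machine does only one job, so each pool is sized for its own peak."""
    return max(prefill) + max(decode)


def average_utilization(prefill, decode, machines):
    """Fraction of machine-periods that are actually busy."""
    busy = sum(p + d for p, d in zip(prefill, decode))
    return busy / (machines * len(prefill))


fungible = machines_needed_fungible(prefill_demand, decode_demand)
dedicated = machines_needed_dedicated(prefill_demand, decode_demand)
fungible_util = average_utilization(prefill_demand, decode_demand, fungible)
dedicated_util = average_utilization(prefill_demand, decode_demand, dedicated)

print(f"fungible pool:   {fungible} machines, utilization {fungible_util:.1%}")
print(f"dedicated pools: {dedicated} machines, utilization {dedicated_util:.1%}")
```

With these made-up numbers the fungible pool serves the same demand with fewer machines, because the two peaks do not occur at the same time. The gap shrinks as the peaks overlap.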

Question – Joe Moore:

Joe Moore, Morgan Stanley. So, a very compelling conversation about reasoning and 100x improvement in compute requirements. But I guess, one of the anxieties the market had was DeepSeek talking about doing that reasoning on fairly low-end hardware, even consumer hardware and China may be forced to do this on low-end hardware. So, can you talk about that disparity and how does this complexity pan out for you guys?

Answer – Jensen Huang:

Yeah. They were talking about a technology called distillation. Distillation, you train the largest model you can, okay. So, ChatGPT-4o I think it's a couple of trillion parameters. R1 is 680. Llama 3 is 400 or so – is it 280, 400, something like that. I forget. And the version that people run mostly is 70B. So, R1 680. ChatGPT is 1.4. The next one I'm going to guess is 20 trillion. And so, you want to build the smartest AI you can. All right. Number one.

The second thing you want to do is you want to distill it, quantize it, reduce the precision, quantize it, distill it and into multiple different configurations. Some of it you might continue to run it in the largest form because, quite frankly, the largest is actually the cheapest. And let me tell you why.

As you know, Joe, there are many problems. Well, getting the smartest person to do it is the cheapest way to do it. There are actually a lot of problems like that. Getting the cheapest person to do something is not necessarily the cheapest way to get it done.

Okay. So it turns out that there are many problems where it would actually cost less in runtime to have the smartest model do it. And so, depending on the problem you're trying to solve, you're going to use the next you use the cheapest form.

And so, we take the largest and you distill it into smaller and smaller versions. And even the smallest version is only like 1 billion parameters, which fits on a phone. But I surely won't use that to do research. You know what I'm saying? I would use the larger version to do research. And so, they all have a place, but it's a technology called distillation. And people just got worked up on it. And even today, you can go into ChatGPT and there's a whole bunch of configurations. Do you guys see that? Is it o3-mini or something like that? It's a distilled version of o3. And if you like to use that, you can. I used the plain one. And so, it's just up to you. Does that make sense? Yeah. It's a technology called distillation. Nothing changed.
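For readers unfamiliar with the term, the core idea of distillation can be sketched in a few lines. This is a minimal illustration of the classic soft-target formulation, where a small "student" model is trained to match a large "teacher" model's output distribution. It is not a description of how any particular lab distills its models, and the logits, temperature, and function names are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Hypothetical logits over three candidate tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, close_student))  # small: student agrees with teacher
print(distillation_loss(teacher, far_student))    # large: student disagrees
```

Training the student means adjusting its parameters to push this loss down across many examples, which is how a much smaller model can inherit part of the larger model's behavior.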

Question – Aaron Rakers:

Perfect. Thanks for taking the questions. Aaron Rakers at Wells Fargo. Jensen, we talk a lot about the compute side of the world and how these scale up architectures are evolving. One of the underlying platforms that I feel are key to your success and your strategy is NVLink. And I'm curious as you move from 72, and I know there's some definitional difference around 72 to 144. You talked about 576. I'm curious how do we think about the evolution of NVLink to support continual scaled up architectures and how important it is to your overall strategy? Thank you.

Answer – Jensen Huang:

Yeah. NVLink, I said yesterday, distributed computing. Distributed computing, it's like this room, distributed computing, let's say. And let's say we have a problem we have to work on together. It is better – you can get the job done faster if we actually have fewer people, but they were all smarter. They ought to do things faster. So you want to scale up first before you scale out. Does that make sense? We all love teamwork, but the smaller the team, the better. And therefore, you want to scale up the AI before you scale out the AI. You want to scale up computing before you scale out computing. Now, scale up is very hard to do. And back in the old days, monolithic semiconductors or otherwise the beginning of Moore's Law was the only way we knew how to scale up. Does that make sense? Are you guys following me?

Just make bigger and bigger and bigger and bigger, bigger chips. At some point, we didn't know how to scale up anymore because we're at reticle limits. And that's the reason why we invented, and you didn't see – you don't remember it anymore. But back in the old days, during GTC, I was talking about this incredible SerDes we invented. The most high-speed, energy efficient SerDes. NVIDIA is world-class at building SerDes. I go so far as saying, we're the world's best at building SerDes. And if there's a new SerDes that's going to get built, we're going to build it. And so, whether it's the chip-to-chip interface SerDes or the package-to-package interface SerDes, which enabled NVLink, our SerDes is absolutely the world's best. Always at the bleeding edge. And the reason for that is because we're trying to extend beyond Moore's Law. We're trying to scale up past the limits of reticle limits.

Now, the question is, how do you do that? People talk about silicon photonics. There's a place for silicon photonics. But you should stay with copper as long as you can. I'm in love with copper. Copper is good. It's time-tested. It works incredibly well. And so, we want to stay with copper as long as we can. It's very reliable. It's very cost-effective, very energy-efficient. And you go to photonics when you have to. And so, that's the rule. And so, we would scale up as far as we can with copper, which is the reason why NVLink72 is in one rack, which is the reason why we pushed the world to liquid cooling. We pushed that knob probably two years earlier than anybody wanted to in the beginning, but everybody's there now, so that we could rack up, scale up NVLink to 72. And then with Kyber, our next-generation system, we can scale to 576 using copper in one rack. And so, we should scale up as far as we can and use copper as far as you can and then use silicon photonics, CPO, if necessary. And therefore, the necessary part, we've prepared the world to – we've now built a technology so in a couple of – two, three years, we could start scaling out to millions of GPUs. Does that make sense? And so, now, we can scale up to thousands, scale out to millions. Yeah. Crazy, crazy technology. Everything is at the limits of physics.

Question – Blayne Curtis:

Blayne Curtis at Jefferies. Appreciate the bonus time, too. Thanks for taking the question. I want to ask you, I know you were kind of making a joke about you couldn't give Hopper away. That's not the point. You were trying to say that performance is so incrementally better, new sales are going to go there very quickly. But I'm kind of curious about the lifecycle. You get this question a lot. I think in the last earnings call you talked about people are still using A100s, right. It's diversity of workload. But I think there was this perception that inference was the lesser workload. You clearly make the case that now you need the best systems with Dynamo. Price per token is very important. So, I just want to think about how you have seen really just greenfield deployments, but do you see people rip and replace? And if power is the constraint, when do we see that in terms of people just pulling out? What is the lifecycle of the GPU these days now that the workload on both training and inferences

Answer – Jensen Huang:

I really appreciate that question. First of all, the lifecycle of NVIDIA accelerators is the longest of anyone's. Would you guys agree? There you go. The lifecycle of NVIDIA's GPUs is the longest of any accelerators in the world. Why does that matter? It directly translates to cost. Easily, easily, three years longer, maybe four. The versatility of our architecture, you could run it for this and that, you could use it for language and images and graphics, you could use – isn't that right? All these data processing libraries, none of them have to be at the forefront. Even using Ampere for data processing is still an order of magnitude faster than CPUs and they still have CPUs in data centers.

And so, we have a whole waterfall of applications they could put their older GPUs into and then use the latest generation for their leading edge work and use their leading edge for factory work, AI factories and such. And so, I also said something. If a chip is not better than Hopper, quite frankly, you couldn't give it away. This is the challenge of building these data centers. And the reason for that is because the cost of operation, the cost of building it up, the TCO of it, the risk of building it up, $100 billion data center, okay, which is only 2 gigawatts, by the way. Every gigawatt is about $40 billion, $50 billion to NVIDIA, right. Every gigawatt of data center is about $50 billion roughly, let's say. So, every gigawatt, $50 billion. And so, when somebody's talking about 5 gigawatts, that math is pretty clear. You've got to do all the math, as I explained yesterday. And it becomes very clear that if you're going to build that new data center, you need the world's best of all these areas.

And then once you build it up, you have to start thinking about how do you retire it someday. And then now the versatility of NVIDIA's architecture really, really kicks in. So, we are not only the world's best in technology, so your revenues will be the highest. We're also the best from a TCO perspective because operationally we're the best. And then very importantly, the lifecycle of our architecture is the longest. And so, if our lifecycle is six years instead of four, that math is pretty easy to do. My goodness, the cost difference is incredible. People are starting to come to terms with all of that, which is the reason why these very specific niche accelerators or point products are kind of hard to justify building up $100 billion data center with.
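The "math" referred to in this answer can be written out as a back-of-the-envelope calculation. The sketch below uses the rough figures quoted in the answer ($50 billion per gigawatt, a 2-gigawatt data center, six years versus four). Spreading the cost evenly over the useful life is an assumption made here for simplicity, and it ignores power, operations, and resale value. The function names are hypothetical.

```python
COST_PER_GW_USD_BN = 50  # rough figure quoted in the answer


def buildout_cost_usd_bn(gigawatts, cost_per_gw=COST_PER_GW_USD_BN):
    """Up-front cost of a data center of the given size, in $ billions."""
    return gigawatts * cost_per_gw


def annual_cost_usd_bn(capex_usd_bn, useful_life_years):
    """Assumed straight-line view: spread the up-front cost evenly over its life."""
    return capex_usd_bn / useful_life_years


capex = buildout_cost_usd_bn(2)            # the 2-gigawatt example
four_year = annual_cost_usd_bn(capex, 4)
six_year = annual_cost_usd_bn(capex, 6)
reduction = 1 - six_year / four_year

print(f"2 GW buildout: ${capex}B; 5 GW buildout: ${buildout_cost_usd_bn(5)}B")
print(f"per year over 4 years: ${four_year:.1f}B")
print(f"per year over 6 years: ${six_year:.1f}B")
print(f"annual cost reduction from the longer life: {reduction:.0%}")
```

Under these simplified assumptions, stretching the useful life from four to six years cuts the annualized hardware cost by about a third, which is the kind of difference the answer is pointing at.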

Question – Pierre Ferragu:

Thanks for the time. Pierre Ferragu, New Street Research. That's really music to my ears, this idea that NVIDIA chips have a very long lifetime and they're actually amortized relatively rapidly on balance sheets. And it really makes me think, in the future, data centers are going to have a business model that is very equivalent to the foundry business model. So, you buy super expensive equipment to manufacture chips. You depreciate 20 years down the line. And so I'm trying to think about how the industry is going to grow with that framework in mind. And I look at the economics, for instance, of OpenAI, the economics you shared with investors in the last round. And my understanding of them is in 2028-2029, they're going to be deploying like a $250 billion data center, and they're going to use it as a frontier to develop the most advanced models. But then that big data center, if they want to move to the next frontier data center, that might be like a $350 billion data center in the beginning of the next decade. They'll have to drop off somewhere a $250 billion data center, and we'll have to figure out how to fit in that data center so that the frontier of the industry can keep going towards, like, the more advanced technology. And I'm still struggling to see how this is going to work because probably the inference of OpenAI alone is not going to be enough to fit in such a big data center every year or every other year if they change their leading edge data center every year or every other year. Does that make sense?

Answer - Jensen Huang:

Except for I don't think they're going to have any trouble. And the reason for that is this. The demand for inference will be larger than the demand for training. It already is. The number of computers they use for inference is nowhere near enough. That's the reason why as we're moving to these reasoning models and I'm super enjoying using ChatGPT, but the – and I use it every day, but the response time is getting slower. And during certain parts of the time, I can tell when you guys are all on it. I pretty much don't get my answer back. Are you guys following me? Obviously, that's a problem. And obviously, they know that. And that's the reason why they're clamoring for more capacity. They're just racing because the inference workload is just too high. Someday I believe training is only 10% of the world's of their capacity, but they need that 10% to be the fastest of the 90% – of the rest of 100%, okay. And so, every year, they're going to come up with the new state of the art. But the reason for that is because they want to stay at the leading edge. They want to have the best products. Just like NVIDIA is today.

Listen, we do everything we can to spare no expense to make sure that we have the state of the art technology. And the reason for that is because we want to stay ahead, they want to stay ahead. There are five companies out there that need to stay ahead and everybody will invest in the necessary state of the art technology to stay ahead. But that technology, that capacity, that training capacity ideally will represent 10%, 5%, 20% of their total capacity.

Let's use an example. You used an excellent example, TSMC. TSMC has a prototyping fab that they run my tape-out chips on. The latency is very – it's optimized for low latency because I need to see my chip, my silicon, my prototypes as soon as possible. The cycle time for a prototype is only, let's pick, two months, but the cycle time for production is six months. And then it's that same equipment. And so, they have a fab that is only for prototyping. But that fab represents, I don't know, 5%, 3% of their overall capacity. The rest of their capacity is used for manufacturing inference. 3% is used for.

And so, I think if you told me that in the near future, OpenAI will invest $350 billion or pick your favorite number, every single year, I completely believe it. And it's just that the trailing capacity will be used for inference. And their revenues will just have to support that. And I believe that the production of AI, the production of intelligence will support that level of scale.

Question – Brett Simpson:

Thanks. It's Brett Simpson at Arete Research. Jensen, it feels like it's a tipping point for reasoning inference at the moment, which is great to see. But a lot of folks in this room are concerned about the macro backdrop overall at the moment. They're concerned about tariffs and potentially what impact tariffs have on the sector. And maybe this leads to a US recession of some sorts. And I'd love to get your perspective and maybe also Colette's perspective, just thinking through if there is a scenario where we see a US recession, what does that do to AI demand? How do you think about the impact on your business if this comes through?

Answer – Jensen Huang:

If there's a recession, I think that the companies that are working on AI are going to shift even more investment towards AI because it's the fastest growing. And so, we will – every CEO will know what to do to shift towards what is growing. And so, second, tariffs. We're preparing and we have been preparing to manufacture onshore. And TSMC's investment in $100 billion of fabs here in Arizona. The fab that they already have, we're in it. We are now running production silicon in Arizona. And so, we will manufacture onshore. The rest of the systems we'll manufacture as much onshore as we need to. And so, I think the ability to manufacture onshore is not a concern of mine. And our partners, we are the largest customer for many companies. And so, they're excellent partners of ours. And I think everybody realizes that the ability to have onshore manufacturing and a very agile supply chain, we have a super agile supply chain, we're manufacturing in so many different places, we could shift things around. Tariffs will have a little impact for us short term. Long term, we're going to have manufacturing onshore.

Jensen Huang:

How about I take one more? The last question has to be a happy question. Even though the tariffs wasn't a sad question, it was, but tariffs was a happy question. How about one more question? The ultimate responsibility, sir.

Question – William Stein:

It might not sound so much like a happy question. It's Will Stein from Truist. But I'm hoping you can put a happy spin on it. Jensen, what do you see as the biggest technical or operational challenges today? You just mentioned with regard to US production, that's not really weighing heavily on you. What is and what is the company – what are you doing to turn that into an opportunity that will result in even better revenue growth going forward?

Answer – Jensen Huang:

Yeah. Well, I really appreciate the question and, in fact, everything I do started out with a problem, right. Almost everything that I do started out with a dream where everything I do started with an irritation or everything I do started out with a concern or some problem. And so, what are the things that we did? What are the things that we're doing? Well, one of the things that you're seeing us do, no company in history has ever laid out a roadmap – a full roadmap three years out. No technology company has ever done that. It's like today we're announcing our next four phones. Are you guys following me? Nobody does that. And the reason why we do it is because, one, the whole world is counting on us and the amount of investment is so gigantic they cannot have surprises. We're in the infrastructure building business, not in the consumer products business. We're the infrastructure business. The most important thing to our partners are things like trust, no surprises, confidence in execution, confidence in your ability to build the best. They use all of the same thoughts, words that you would use to describe, for example, TSMC. Are you guys following me? All the same ideas.

We are an infrastructure building company now. And so, many of the things that you saw me do are reaction to that. And I know it looks quite strange for a technology company to sit here and tell you about all these things that, while you're sitting here buying this one, I'm already telling you about the next one and you're about to place an order for next one, I'm already telling you about the one after that forcing you to live in regret all the time, okay.

However, I also know this because they need to run their business every single day. They can't wait to run their business in three years and do a better job. They got to run it every single day. They have no choice because we're in the AI factory business. So, one, we're in the AI infrastructure business. No excuses. No surprises. Long roadmap.

Two, we're in the AI factory business. We got to make money every day. They're going to buy some every day. No matter what. No matter what I tell them about Rubin, they're going to buy Blackwells. There's no question. No matter what I tell them about Feynman – I couldn't wait to tell you guys about Feynman, but they're still going to – I thought the keynote was long enough. And so, they're still going to, right, does it make sense? We're in the AI factory business. People have to make money every day.

And then here's the third thing is we are a foundation business for so many industries. AI is a foundational business for so many industries and we have only so far served the cloud. And in order for us to serve the telecommunications network, and yesterday we announced with T-Mobile and Cisco in the US, we're going to build a 6G AI RAN and it's all completely built on top of Blackwell. And that ecosystem, what's your CapEx spend, $100 billion a year? That has to be retooled and rearchitected and reinvented. You can't just do that in the cloud. We had to do that for AI industries. You can't just do that in the cloud. We have to do that for AI enterprise IT. You can't just rely on the cloud to do that. They're different architectures, different go-to markets, different software stacks, different product configurations, different purchasing style, and therefore the product has to fit the style of purchasing. So, each one of these industries needs an AI infrastructure.

So, we now know three things about our company.

We're an infrastructure company. So, the supply chain, front and back behind us. Everything from land, power and capital, we are a part of, okay. And so, one, AI infrastructure. Two, AI factories.

And three, we're AI foundation, foundational company that the whole world is depending on, and therefore we have to bring the technology to them. It's not just about AI to them. It's about the entire computing platform to them. And that includes networking and storage and computing. And we have the might to do that. We have the technical skills to do that. And so, you heard all these things, frankly, in the keynote yesterday while I had to also remember while we have work to do and also still had to be a little entertaining. But we did a lot of work yesterday. And we set the blueprints out for not just our company, but for the companies that are here, the industries that are here, the companies that are here, the companies that aren't here, and the associated companies before and afterwards in the supply chain.

So many companies were affected by what I said yesterday. And we're laying the foundations for all of that. And so, I want to thank all of you for coming to GTC. It's great to see all of you. This is an extraordinary moment in time. I do really appreciate the question and the comment about R1 and the misunderstanding of that. There's a profound and deep misunderstanding of it. It's actually a profoundly and deeply exciting moment, and that it's incredible that the world has moved towards reasoning AIs. But that is even then just the tip of the iceberg, and I hope to catch up with you guys again.

We're going to COMPUTEX. So I hope you're coming to COMPUTEX. This year's COMPUTEX is going to be gigantic. Okay. So we have lots and lots of things to do at COMPUTEX this year because, as you know, the ecosystem, the computing ecosystem starts there. And we got a mountain of work to do there, and so I look forward to seeing you guys there. All right, guys. Thank you.